Supercomputers Simulate 800,000 Years of California Earthquakes to Pinpoint Risks

Scientists are working to improve their calculations of earthquake danger by combining maps of known faults with the use of supercomputers to simulate potential shaking deep into the future in California.

Massive earthquakes are rare events—and the scarcity of information about them can blind us to their risks, especially when it comes to determining the danger to a specific location or structure.

To sharpen those estimates, scientists are combining maps and histories of known faults with supercomputer simulations of potential shaking deep into California's future. The method is described in an article just published in the Bulletin of the Seismological Society of America.

“People always want to know: When is the next ‘Big One’ coming,” said coauthor Bruce Shaw, a seismologist at Columbia University’s Lamont-Doherty Earth Observatory. “Chaos always gets in the way of answering that question. But what we can get at is what will happen when it happens.”

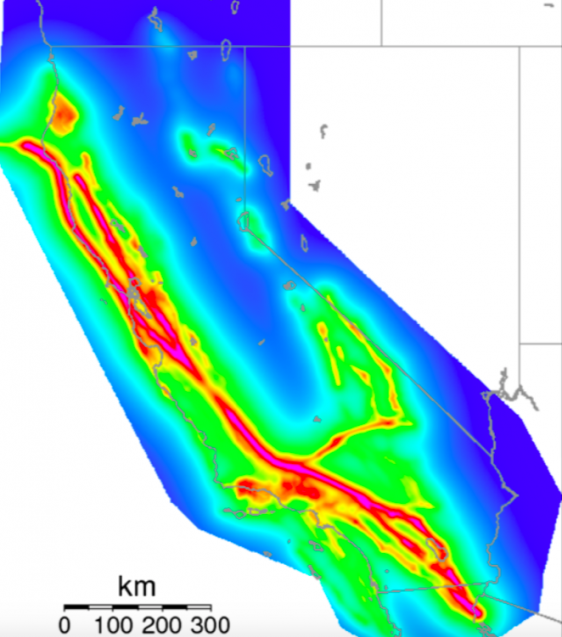

The work is aimed at determining the probability of an earthquake occurring along any of California’s hundreds of earthquake-producing faults, the scale of earthquake that could be expected, and how much shaking it would cause.

Southern California has not had a truly big earthquake since 1857, the last time the San Andreas fault ruptured in a massive magnitude 7.9 earthquake. “We haven’t observed most of the possible events that could cause large damage,” explained Kevin Milner, a computer scientist and seismology researcher at the Southern California Earthquake Center.

One traditional way of getting around this lack of data involves digging trenches across faults to learn more about past ruptures, collating information from many earthquakes around the world, and creating a statistical model of hazard. Alternatively, researchers use supercomputers to simulate a specific earthquake in a specific place. The new framework takes elements of both methodologies to forecast the likelihood and impact of earthquakes over an entire region.

Milner and Shaw’s new study presents results from a prototype of the so-called Rate-State earthquake simulator, or RSQSim, that simulates hundreds of thousands of years of seismicity in California. Coupled with another code, CyberShake, the framework can calculate the amount of shaking that would occur for each quake.

Their results compare well with historical earthquakes and the results of other methods, and display a realistic distribution of earthquake probabilities. According to the developers, the new approach improves the ability to pinpoint how much a given location will shake during an earthquake. This should eventually allow building code developers, architects and structural engineers to design more resilient buildings that can survive earthquakes at a specific site.

“You don’t want to overbuild, because that wastes money. But of course you also don’t want to underbuild,” said Shaw.

RSQSim transforms mathematical representations of the geophysical forces at play in earthquakes—the standard model of how ruptures nucleate and propagate—into algorithms, and then solves them on some of the most powerful supercomputers on the planet. The research was enabled over several years by government-sponsored supercomputers at the Texas Advanced Computing Center, including Frontera, the most powerful system at any university in the world. The researchers also used the Blue Waters system at the National Center for Supercomputing Applications, and the Summit system—the world’s second-most powerful supercomputer—at the Oak Ridge Leadership Computing Facility.

“We’ve made a lot of progress on Frontera in determining what kind of earthquakes we can expect, on which fault, and how often,” said Christine Goulet, executive director for applied science at SCEC, who was also involved in the work. “We don’t prescribe or tell the code when the earthquakes are going to happen. We launch a simulation of hundreds of thousands of years, and just let the code transfer the stress from one fault to another.”

The project is based on the complex geologic structures of California. Over 800,000 virtual years, it simulated how stresses would form and dissipate as tectonic forces act on the Earth. From these simulations, the framework generated a catalog: a record that a virtual earthquake occurred at a certain place, at a given time, with a certain magnitude and attributes. The outputs of RSQSim were then fed into CyberShake, which again used computer models of geophysics to predict how much shaking, in terms of ground acceleration and duration, would occur as a result of each quake.
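To make the idea of a simulated catalog concrete, here is a minimal Python sketch of how such a record might be represented and how an annual rate of large events could be read off it. It does not show RSQSim's or CyberShake's actual data formats or code. The `CatalogEvent` class, the `annual_rate` function, the fault labels, and all the numbers are hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEvent:
    """One virtual earthquake (hypothetical record layout, not RSQSim's)."""
    time_years: float   # time of the event within the simulation
    magnitude: float    # moment magnitude of the event
    fault_id: str       # made-up label for the fault that ruptured


def annual_rate(catalog, duration_years, min_magnitude):
    """Average number of events per year at or above min_magnitude."""
    count = sum(1 for ev in catalog if ev.magnitude >= min_magnitude)
    return count / duration_years


# Toy catalog covering 1,000 virtual years; every value here is invented.
toy_catalog = [
    CatalogEvent(120.0, 6.8, "fault_a"),
    CatalogEvent(410.5, 7.9, "fault_b"),
    CatalogEvent(655.0, 7.2, "fault_a"),
    CatalogEvent(930.2, 6.5, "fault_c"),
]

rate_m7 = annual_rate(toy_catalog, duration_years=1000.0, min_magnitude=7.0)
mean_interval_years = 1.0 / rate_m7  # average spacing between such events
```

In this toy example, two of the four events reach magnitude 7, so the rate is 0.002 per year, or one such quake every 500 years on average. A catalog spanning hundreds of thousands of years offers many more samples of rare, large events than the historical record does, which is the motivation the researchers describe.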

“The framework outputs a full slip-time history: where a rupture occurs and how it grew,” Milner said. “We found it produces realistic ground motions, which tells us that the physics implemented in the model is working as intended.”

The team has more work planned to validate the results before they can be used for design applications. But so far, the researchers have found that the RSQSim framework produces rich, variable earthquakes overall, a sign that it is producing reasonable results.

For some sites, the results suggest that shaking hazard goes down relative to state-of-practice estimates made by the U.S. Geological Survey. But for some sites that have special configurations of nearby faults or local geological features, for example near San Bernardino, the hazard goes up.

The project is an outgrowth of an earlier study by Shaw, Milner and colleagues that simulated 500,000 years of shaking.

Adapted from a press release by the Texas Advanced Computing Center.